|

TridiagLU

1.0

Scalable, parallel solver for tridiagonal system of equations

|

|

TridiagLU

1.0

Scalable, parallel solver for tridiagonal system of equations

|

Solve tridiagonal systems of equation using parallel LU decomposition. More...

#include <stdlib.h>#include <stdio.h>#include <sys/time.h>#include <string.h>#include <mpi.h>#include <tridiagLU.h>Go to the source code of this file.

Functions | |

| int | tridiagLU (double *a, double *b, double *c, double *x, int n, int ns, void *r, void *m) |

Solve tridiagonal systems of equation using parallel LU decomposition.

Definition in file tridiagLU.c.

| int tridiagLU | ( | double * | a, |

| double * | b, | ||

| double * | c, | ||

| double * | x, | ||

| int | n, | ||

| int | ns, | ||

| void * | r, | ||

| void * | m | ||

| ) |

Solve tridiagonal (non-periodic) systems of equations using parallel LU decomposition: This function can solve multiple independent systems with one call. The systems need not share the same left- or right-hand-sides. The iterative substructuring method is used in this function that can be briefly described through the following 4 stages:

Specific details of the method implemented here are available in:

More references on this class of parallel tridiagonal solvers:

Array layout: The arguments a, b, c, and x are local 1D arrays (containing this processor's part of the subdiagonal, diagonal, superdiagonal, and right-hand-side) of size (n X ns), where n is the local size of the system, and ns is the number of independent systems to solve. The ordering of the elements in these arrays is as follows:

For example, consider the following systems:

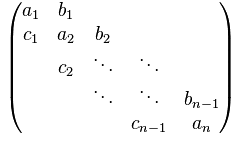

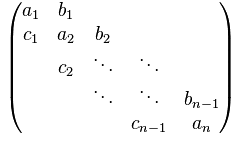

\begin{equation} \left[\begin{array}{ccccc} b_0^k & c_0^k & & & \\ a_1^k & b_1^k & c_1^k & & \\ & a_2^k & b_2^k & c_2^k & & \\ & & a_3^k & b_3^k & c_3^k & \\ & & & a_4^k & b_4^k & c_4^k \\ \end{array}\right] \left[\begin{array}{c} x_0^k \\ x_1^k \\ x_2^k \\ x_3^k \\ x_4^k \end{array}\right] = \left[\begin{array}{c} r_0^k \\ r_1^k \\ r_2^k \\ r_3^k \\ r_4^k \end{array}\right]; \ \ k= 1,\cdots,ns \end{equation}

and let \( ns = 3\). Note that in the code, \(x\) and \(r\) are the same array x.

Then, the array b must be a 1D array with the following layout of elements:

[

b_0^0, b_0^1, b_0^2, (diagonal element of the first row in each system)

b_1^0, b_1^1, b_1^2, (diagonal element of the second row in each system)

...,

b_{n-1}^0, b_{n-1}^1, b_{n-1}^2 (diagonal element of the last row in each system)

]

The arrays a, c, and x are stored similarly.

Notes:

| a | Array containing the sub-diagonal elements |

| b | Array containing the diagonal elements |

| c | Array containing the super-diagonal elements |

| x | Right-hand side; will contain the solution on exit |

| n | Local size of the system on this processor |

| ns | Number of systems to solve |

| r | Object of type TridiagLU |

| m | MPI communicator |

Definition at line 83 of file tridiagLU.c.