|

TridiagLU

1.0

Scalable, parallel solver for tridiagonal system of equations

|

|

TridiagLU

1.0

Scalable, parallel solver for tridiagonal system of equations

|

Header file for TridiagLU. More...

Go to the source code of this file.

Data Structures | |

| struct | TridiagLU |

Macros | |

| #define | _TRIDIAG_JACOBI_ "jacobi" |

| #define | _TRIDIAG_GS_ "gather-and-solve" |

Functions | |

| int | tridiagLU (double *, double *, double *, double *, int, int, void *, void *) |

| int | tridiagLUGS (double *, double *, double *, double *, int, int, void *, void *) |

| int | tridiagIterJacobi (double *, double *, double *, double *, int, int, void *, void *) |

| int | tridiagLUInit (void *, void *) |

| int | blocktridiagLU (double *, double *, double *, double *, int, int, int, void *, void *) |

| int | blocktridiagIterJacobi (double *, double *, double *, double *, int, int, int, void *, void *) |

| int | tridiagScaLPK (double *, double *, double *, double *, int, int, void *, void *) |

Header file for TridiagLU.

Definition in file tridiagLU.h.

| struct TridiagLU |

Definition at line 81 of file tridiagLU.h.

| Data Fields | ||

|---|---|---|

| char | reducedsolvetype[50] |

Choice of solver for solving the reduced system. May be _TRIDIAG_JACOBI_ or _TRIDIAG_GS_. |

| int | evaluate_norm |

calculate norm at each iteration? (relevant only for iterative solvers) |

| int | maxiter |

maximum number of iterations (relevant only for iterative solvers) |

| double | atol |

absolute tolerance (relevant only for iterative solvers) |

| double | rtol |

relative tolerace (relevant only for iterative solvers) |

| int | exititer |

number of iterations it ran for (relevant only for iterative solvers) |

| double | exitnorm |

error norm at exit (relevant only for iterative solvers) |

| int | verbose |

print iterations and norms (relevant only for iterative solvers) |

| double | total_time |

Total wall time in seconds |

| double | stage1_time |

Wall time (in seconds) for stage 1 of tridiagLU() or blocktridiagLU() |

| double | stage2_time |

Wall time (in seconds) for stage 2 of tridiagLU() or blocktridiagLU() |

| double | stage3_time |

Wall time (in seconds) for stage 3 of tridiagLU() or blocktridiagLU() |

| double | stage4_time |

Wall time (in seconds) for stage 4 of tridiagLU() or blocktridiagLU() |

| int | blacs_ctxt |

Context variable for ScaLAPACK (relevant if compiled with ScaLAPACK support (-Dwith_scalapack)

|

| #define _TRIDIAG_JACOBI_ "jacobi" |

Jacobi method

Definition at line 71 of file tridiagLU.h.

| #define _TRIDIAG_GS_ "gather-and-solve" |

| int tridiagLU | ( | double * | a, |

| double * | b, | ||

| double * | c, | ||

| double * | x, | ||

| int | n, | ||

| int | ns, | ||

| void * | r, | ||

| void * | m | ||

| ) |

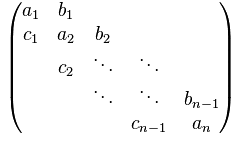

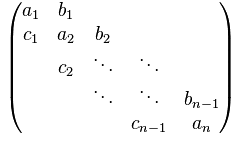

Solve tridiagonal (non-periodic) systems of equations using parallel LU decomposition: This function can solve multiple independent systems with one call. The systems need not share the same left- or right-hand-sides. The iterative substructuring method is used in this function that can be briefly described through the following 4 stages:

Specific details of the method implemented here are available in:

More references on this class of parallel tridiagonal solvers:

Array layout: The arguments a, b, c, and x are local 1D arrays (containing this processor's part of the subdiagonal, diagonal, superdiagonal, and right-hand-side) of size (n X ns), where n is the local size of the system, and ns is the number of independent systems to solve. The ordering of the elements in these arrays is as follows:

For example, consider the following systems:

\begin{equation} \left[\begin{array}{ccccc} b_0^k & c_0^k & & & \\ a_1^k & b_1^k & c_1^k & & \\ & a_2^k & b_2^k & c_2^k & & \\ & & a_3^k & b_3^k & c_3^k & \\ & & & a_4^k & b_4^k & c_4^k \\ \end{array}\right] \left[\begin{array}{c} x_0^k \\ x_1^k \\ x_2^k \\ x_3^k \\ x_4^k \end{array}\right] = \left[\begin{array}{c} r_0^k \\ r_1^k \\ r_2^k \\ r_3^k \\ r_4^k \end{array}\right]; \ \ k= 1,\cdots,ns \end{equation}

and let \( ns = 3\). Note that in the code, \(x\) and \(r\) are the same array x.

Then, the array b must be a 1D array with the following layout of elements:

[

b_0^0, b_0^1, b_0^2, (diagonal element of the first row in each system)

b_1^0, b_1^1, b_1^2, (diagonal element of the second row in each system)

...,

b_{n-1}^0, b_{n-1}^1, b_{n-1}^2 (diagonal element of the last row in each system)

]

The arrays a, c, and x are stored similarly.

Notes:

| a | Array containing the sub-diagonal elements |

| b | Array containing the diagonal elements |

| c | Array containing the super-diagonal elements |

| x | Right-hand side; will contain the solution on exit |

| n | Local size of the system on this processor |

| ns | Number of systems to solve |

| r | Object of type TridiagLU |

| m | MPI communicator |

Definition at line 83 of file tridiagLU.c.

| int tridiagLUGS | ( | double * | a, |

| double * | b, | ||

| double * | c, | ||

| double * | x, | ||

| int | n, | ||

| int | ns, | ||

| void * | r, | ||

| void * | m | ||

| ) |

Solve tridiagonal (non-periodic) systems of equations using the gather-and-solve approach: This function can solve multiple independent systems with one call. The systems need not share the same left- or right-hand-sides. The "gather-and-solve" approach gathers a tridiagonal system on one processor and solves it using tridiagLU() (sending NULL as the argument for MPI communicator to indicate that a serial solution is desired). Given multiple tridiagonal systems (ns > 1), this function will gather the systems on different processors in an optimal way, and thus each processor will solve some of the systems. After the system(s) is (are) solved, the solution(s) is (are) scattered back to the original processors.

Array layout: The arguments a, b, c, and x are local 1D arrays (containing this processor's part of the subdiagonal, diagonal, superdiagonal, and right-hand-side) of size (n X ns), where n is the local size of the system, and ns is the number of independent systems to solve. The ordering of the elements in these arrays is as follows:

For example, consider the following systems:

\begin{equation} \left[\begin{array}{ccccc} b_0^k & c_0^k & & & \\ a_1^k & b_1^k & c_1^k & & \\ & a_2^k & b_2^k & c_2^k & & \\ & & a_3^k & b_3^k & c_3^k & \\ & & & a_4^k & b_4^k & c_4^k \\ \end{array}\right] \left[\begin{array}{c} x_0^k \\ x_1^k \\ x_2^k \\ x_3^k \\ x_4^k \end{array}\right] = \left[\begin{array}{c} r_0^k \\ r_1^k \\ r_2^k \\ r_3^k \\ r_4^k \end{array}\right]; \ \ k= 1,\cdots,ns \end{equation}

and let \( ns = 3\). Note that in the code, \(x\) and \(r\) are the same array x.

Then, the array b must be a 1D array with the following layout of elements:

[

b_0^0, b_0^1, b_0^2, (diagonal element of the first row in each system)

b_1^0, b_1^1, b_1^2, (diagonal element of the second row in each system)

...,

b_{n-1}^0, b_{n-1}^1, b_{n-1}^2 (diagonal element of the last row in each system)

]

The arrays a, c, and x are stored similarly.

Notes:

| a | Array containing the sub-diagonal elements |

| b | Array containing the diagonal elements |

| c | Array containing the super-diagonal elements |

| x | Right-hand side; will contain the solution on exit |

| n | Local size of the system on this processor |

| ns | Number of systems to solve |

| r | Object of type TridiagLU |

| m | MPI communicator |

Definition at line 64 of file tridiagLUGS.c.

| int tridiagIterJacobi | ( | double * | a, |

| double * | b, | ||

| double * | c, | ||

| double * | x, | ||

| int | n, | ||

| int | ns, | ||

| void * | r, | ||

| void * | m | ||

| ) |

Solve tridiagonal (non-periodic) systems of equations using point Jacobi iterations: This function can solve multiple independent systems with one call. The systems need not share the same left- or right-hand-sides. The initial guess is taken as the solution of

\begin{equation} {\rm diag}\left[{\bf b}\right]{\bf x} = {\bf r} \end{equation}

where \({\bf b}\) represents the diagonal elements of the tridiagonal system, and \({\bf r}\) is the right-hand-side, stored in \({\bf x}\) at the start of this function.

Array layout: The arguments a, b, c, and x are local 1D arrays (containing this processor's part of the subdiagonal, diagonal, superdiagonal, and right-hand-side) of size (n X ns), where n is the local size of the system, and ns is the number of independent systems to solve. The ordering of the elements in these arrays is as follows:

For example, consider the following systems:

\begin{equation} \left[\begin{array}{ccccc} b_0^k & c_0^k & & & \\ a_1^k & b_1^k & c_1^k & & \\ & a_2^k & b_2^k & c_2^k & & \\ & & a_3^k & b_3^k & c_3^k & \\ & & & a_4^k & b_4^k & c_4^k \\ \end{array}\right] \left[\begin{array}{c} x_0^k \\ x_1^k \\ x_2^k \\ x_3^k \\ x_4^k \end{array}\right] = \left[\begin{array}{c} r_0^k \\ r_1^k \\ r_2^k \\ r_3^k \\ r_4^k \end{array}\right]; \ \ k= 1,\cdots,ns \end{equation}

and let \( ns = 3\). Note that in the code, \(x\) and \(r\) are the same array x.

Then, the array b must be a 1D array with the following layout of elements:

[

b_0^0, b_0^1, b_0^2, (diagonal element of the first row in each system)

b_1^0, b_1^1, b_1^2, (diagonal element of the second row in each system)

...,

b_{n-1}^0, b_{n-1}^1, b_{n-1}^2 (diagonal element of the last row in each system)

]

The arrays a, c, and x are stored similarly.

Notes:

| a | Array containing the sub-diagonal elements |

| b | Array containing the diagonal elements |

| c | Array containing the super-diagonal elements |

| x | Right-hand side; will contain the solution on exit |

| n | Local size of the system on this processor |

| ns | Number of systems to solve |

| r | Object of type TridiagLU |

| m | MPI communicator |

Definition at line 64 of file tridiagIterJacobi.c.

| int tridiagLUInit | ( | void * | r, |

| void * | c | ||

| ) |

Initialize the tridiagLU solver by setting the various parameters in TridiagLU to their default values. If the file lusolver.inp is available, read it and set the parameters.

The file lusolver.inp must be in the following format:

begin

<keyword> <value>

<keyword> <value>

<keyword> <value>

...

<keyword> <value>

end

where the list of keywords are:

| Keyword name | Type | Variable | Default value |

|---|---|---|---|

| evaluate_norm | int | TridiagLU::evaluate_norm | 1 |

| maxiter | int | TridiagLU::maxiter | 10 |

| atol | double | TridiagLU::atol | 1e-12 |

| rtol | double | TridiagLU::rtol | 1e-10 |

| verbose | int | TridiagLU::verbose | 0 |

| reducedsolvetype | char[] | TridiagLU::reducedsolvetype | _TRIDIAG_JACOBI_ |

| r | Object of type TridiagLU |

| c | MPI communicator |

Definition at line 39 of file tridiagLUInit.c.

| int blocktridiagLU | ( | double * | a, |

| double * | b, | ||

| double * | c, | ||

| double * | x, | ||

| int | n, | ||

| int | ns, | ||

| int | bs, | ||

| void * | r, | ||

| void * | m | ||

| ) |

Solve block tridiagonal (non-periodic) systems of equations using parallel LU decomposition: This function can solve multiple independent systems with one call. The systems need not share the same left- or right-hand-sides. The iterative substructuring method is used in this function that can be briefly described through the following 4 stages:

Specific details of the method implemented here are available in:

More references on this class of parallel tridiagonal solvers:

Array layout: The arguments a, b, and c are local 1D arrays (containing this processor's part of the subdiagonal, diagonal, and superdiagonal) of size (n X ns X bs^2), and x is a local 1D array (containing this processor's part of the right-hand-side, and will contain the solution on exit) of size (n X ns X bs), where n is the local size of the system, ns is the number of independent systems to solve, and bs is the block size. The ordering of the elements in these arrays is as follows:

For example, consider the following systems:

\begin{equation} \left[\begin{array}{ccccc} B_0^k & C_0^k & & & \\ A_1^k & B_1^k & C_1^k & & \\ & A_2^k & B_2^k & C_2^k & & \\ & & A_3^k & B_3^k & C_3^k & \\ & & & A_4^k & B_4^k & C_4^k \\ \end{array}\right] \left[\begin{array}{c} X_0^k \\ X_1^k \\ X_2^k \\ X_3^k \\ X_4^k \end{array}\right] = \left[\begin{array}{c} R_0^k \\ R_1^k \\ R_2^k \\ R_3^k \\ R_4^k \end{array}\right]; \ \ k= 1,\cdots,ns \end{equation}

where \(A\), \(B\), and \(C\) are matrices of size bs = 2 (say), and let \( ns = 3\). In the equation above, we have

\begin{equation} B_i^k = \left[\begin{array}{cc} b_{00,i}^k & b_{01,i}^k \\ b_{10,i}^k & b_{11,i}^k \end{array}\right], X_i^k = \left[\begin{array}{c} x_{0,i}^k \\ x_{1,i}^k \end{array} \right], R_i^k = \left[\begin{array}{c} r_{0,i}^k \\ r_{1,i}^k \end{array} \right] \end{equation}

Note that in the code, \(X\) and \(R\) are the same array x.

Then, the array b must be a 1D array with the following layout of elements:

[

b_{00,0}^0, b_{01,0}^0, b_{10,0}^0, b_{11,0}^0, b_{00,0}^1, b_{01,0}^1, b_{10,0}^1, b_{11,0}^1, b_{00,0}^2, b_{01,0}^2, b_{10,0}^2, b_{11,0}^2,

b_{00,1}^0, b_{01,1}^0, b_{10,1}^0, b_{11,1}^0, b_{00,1}^1, b_{01,1}^1, b_{10,1}^1, b_{11,1}^1, b_{00,1}^2, b_{01,1}^2, b_{10,1}^2, b_{11,1}^2,

...,

b_{00,n-1}^0, b_{01,n-1}^0, b_{10,n-1}^0, b_{11,n-1}^0, b_{00,n-1}^1, b_{01,n-1}^1, b_{10,n-1}^1, b_{11,n-1}^1, b_{00,n-1}^2, b_{01,n-1}^2, b_{10,n-1}^2, b_{11,n-1}^2

]

The arrays a and c are stored similarly.

The array corresponding to a vector (the solution and the right-hand-side x) must be a 1D array with the following layout of elements:

[

x_{0,0}^0, x_{1,0}^0, x_{0,0}^1, x_{1,0}^1,x_{0,0}^2, x_{1,0}^2,

x_{0,1}^0, x_{1,1}^0, x_{0,1}^1, x_{1,1}^1,x_{0,1}^2, x_{1,1}^2,

...,

x_{0,n-1}^0, x_{1,n-1}^0, x_{0,n-1}^1, x_{1,n-1}^1,x_{0,n-1}^2, x_{1,n-1}^2

]

Notes:

| a | Array containing the sub-diagonal elements |

| b | Array containing the diagonal elements |

| c | Array containing the super-diagonal elements |

| x | Right-hand side; will contain the solution on exit |

| n | Local size of the system on this processor (not multiplied by the block size) |

| ns | Number of systems to solve |

| bs | Block size |

| r | Object of type TridiagLU (contains wall times at exit) |

| m | MPI communicator |

Definition at line 107 of file blocktridiagLU.c.

| int blocktridiagIterJacobi | ( | double * | a, |

| double * | b, | ||

| double * | c, | ||

| double * | x, | ||

| int | n, | ||

| int | ns, | ||

| int | bs, | ||

| void * | r, | ||

| void * | m | ||

| ) |

Solve block tridiagonal (non-periodic) systems of equations using point Jacobi iterations: This function can solve multiple independent systems with one call. The systems need not share the same left- or right-hand-sides. The initial guess is taken as the solution of

\begin{equation} {\rm diag}\left[{\bf b}\right]{\bf x} = {\bf r} \end{equation}

where \({\bf b}\) represents the diagonal elements of the tridiagonal system, and \({\bf r}\) is the right-hand-side, stored in \({\bf x}\) at the start of this function.

Array layout: The arguments a, b, and c are local 1D arrays (containing this processor's part of the subdiagonal, diagonal, and superdiagonal) of size (n X ns X bs^2), and x is a local 1D array (containing this processor's part of the right-hand-side, and will contain the solution on exit) of size (n X ns X bs), where n is the local size of the system, ns is the number of independent systems to solve, and bs is the block size. The ordering of the elements in these arrays is as follows:

For example, consider the following systems:

\begin{equation} \left[\begin{array}{ccccc} B_0^k & C_0^k & & & \\ A_1^k & B_1^k & C_1^k & & \\ & A_2^k & B_2^k & C_2^k & & \\ & & A_3^k & B_3^k & C_3^k & \\ & & & A_4^k & B_4^k & C_4^k \\ \end{array}\right] \left[\begin{array}{c} X_0^k \\ X_1^k \\ X_2^k \\ X_3^k \\ X_4^k \end{array}\right] = \left[\begin{array}{c} R_0^k \\ R_1^k \\ R_2^k \\ R_3^k \\ R_4^k \end{array}\right]; \ \ k= 1,\cdots,ns \end{equation}

where \(A\), \(B\), and \(C\) are matrices of size bs = 2 (say), and let \( ns = 3\). In the equation above, we have

\begin{equation} B_i^k = \left[\begin{array}{cc} b_{00,i}^k & b_{01,i}^k \\ b_{10,i}^k & b_{11,i}^k \end{array}\right], X_i^k = \left[\begin{array}{c} x_{0,i}^k \\ x_{1,i}^k \end{array} \right], R_i^k = \left[\begin{array}{c} r_{0,i}^k \\ r_{1,i}^k \end{array} \right] \end{equation}

Note that in the code, \(X\) and \(R\) are the same array x.

Then, the array b must be a 1D array with the following layout of elements:

[

b_{00,0}^0, b_{01,0}^0, b_{10,0}^0, b_{11,0}^0, b_{00,0}^1, b_{01,0}^1, b_{10,0}^1, b_{11,0}^1, b_{00,0}^2, b_{01,0}^2, b_{10,0}^2, b_{11,0}^2,

b_{00,1}^0, b_{01,1}^0, b_{10,1}^0, b_{11,1}^0, b_{00,1}^1, b_{01,1}^1, b_{10,1}^1, b_{11,1}^1, b_{00,1}^2, b_{01,1}^2, b_{10,1}^2, b_{11,1}^2,

...,

b_{00,n-1}^0, b_{01,n-1}^0, b_{10,n-1}^0, b_{11,n-1}^0, b_{00,n-1}^1, b_{01,n-1}^1, b_{10,n-1}^1, b_{11,n-1}^1, b_{00,n-1}^2, b_{01,n-1}^2, b_{10,n-1}^2, b_{11,n-1}^2

]

The arrays a and c are stored similarly.

The array corresponding to a vector (the solution and the right-hand-side x) must be a 1D array with the following layout of elements:

[

x_{0,0}^0, x_{1,0}^0, x_{0,0}^1, x_{1,0}^1,x_{0,0}^2, x_{1,0}^2,

x_{0,1}^0, x_{1,1}^0, x_{0,1}^1, x_{1,1}^1,x_{0,1}^2, x_{1,1}^2,

...,

x_{0,n-1}^0, x_{1,n-1}^0, x_{0,n-1}^1, x_{1,n-1}^1,x_{0,n-1}^2, x_{1,n-1}^2

]

Notes:

| a | Array containing the sub-diagonal elements |

| b | Array containing the diagonal elements |

| c | Array containing the super-diagonal elements |

| x | Right-hand side; will contain the solution on exit |

| n | Local size of the system on this processor (not multiplied by the block size) |

| ns | Number of systems to solve |

| bs | Block size |

| r | Object of type TridiagLU |

| m | MPI communicator |

Definition at line 88 of file blocktridiagIterJacobi.c.

| int tridiagScaLPK | ( | double * | a, |

| double * | b, | ||

| double * | c, | ||

| double * | x, | ||

| int | n, | ||

| int | ns, | ||

| void * | r, | ||

| void * | m | ||

| ) |

Solve tridiagonal (non-periodic) systems of equations using ScaLAPACK's pddtsv: This function can solve multiple independent systems with one call. The systems need not share the same left- or right-hand-sides.

Array layout: The arguments a, b, c, and x are local 1D arrays (containing this processor's part of the subdiagonal, diagonal, superdiagonal, and right-hand-side) of size (n X ns), where n is the local size of the system, and ns is the number of independent systems to solve. The ordering of the elements in these arrays is as follows:

For example, consider the following systems:

\begin{equation} \left[\begin{array}{ccccc} b_0^k & c_0^k & & & \\ a_1^k & b_1^k & c_1^k & & \\ & a_2^k & b_2^k & c_2^k & & \\ & & a_3^k & b_3^k & c_3^k & \\ & & & a_4^k & b_4^k & c_4^k \\ \end{array}\right] \left[\begin{array}{c} x_0^k \\ x_1^k \\ x_2^k \\ x_3^k \\ x_4^k \end{array}\right] = \left[\begin{array}{c} r_0^k \\ r_1^k \\ r_2^k \\ r_3^k \\ r_4^k \end{array}\right]; \ \ k= 1,\cdots,ns \end{equation}

and let \( ns = 3\). Note that in the code, \(x\) and \(r\) are the same array x.

Then, the array b must be a 1D array with the following layout of elements:

[

b_0^0, b_0^1, b_0^2, (diagonal element of the first row in each system)

b_1^0, b_1^1, b_1^2, (diagonal element of the second row in each system)

...,

b_{n-1}^0, b_{n-1}^1, b_{n-1}^2 (diagonal element of the last row in each system)

]

The arrays a, c, and x are stored similarly.

Notes:

| a | Array containing the sub-diagonal elements |

| b | Array containing the diagonal elements |

| c | Array containing the super-diagonal elements |

| x | Right-hand side; will contain the solution on exit |

| n | Local size of the system on this processor |

| ns | Number of systems to solve |

| r | Object of type TridiagLU |

| m | MPI communicator |

Definition at line 71 of file tridiagScaLPK.c.