77 double norm=0,norm0=0,global_norm=0;

80 MPI_Comm *comm = (MPI_Comm*) m;

84 MPI_Comm_size(*comm,&nproc);

85 MPI_Comm_rank(*comm,&rank);

93 fprintf(stderr,

"Error in tridiagIterJacobi(): NULL pointer passed for parameters!\n");

99 for (d=0; d<ns; d++) {

100 if (b[i*ns+d]*b[i*ns+d] < context->

atol*context->

atol) {

101 fprintf(stderr,

"Error in tridiagIterJacobi(): Encountered zero on main diagonal!\n");

107 double *rhs = (

double*) calloc (ns*n,

sizeof(

double));

108 for (i=0; i<n; i++) {

109 for (d=0; d<ns; d++) {

110 rhs[i*ns+d] = x[i*ns+d];

111 x[i*ns+d] /= b[i*ns+d];

115 double *recvbufL, *recvbufR, *sendbufL, *sendbufR;

116 recvbufL = (

double*) calloc (ns,

sizeof(

double));

117 recvbufR = (

double*) calloc (ns,

sizeof(

double));

118 sendbufL = (

double*) calloc (ns,

sizeof(

double));

119 sendbufR = (

double*) calloc (ns,

sizeof(

double));

126 if (context->

evaluate_norm) MPI_Allreduce(&n,&NT,1,MPI_INT,MPI_SUM,*comm);

131 if (context->

verbose) printf(

"\n");

133 if (context->

verbose && (!rank)) printf(

"\n");

140 if ( (iter >= context->

maxiter)

141 || (iter && context->

evaluate_norm && (global_norm < context->atol))

142 || (iter && context->

evaluate_norm && (global_norm/norm0 < context->rtol)) ) {

147 for (d=0; d<ns; d++) recvbufL[d] = recvbufR[d] = 0;

149 MPI_Request req[4] = {MPI_REQUEST_NULL,MPI_REQUEST_NULL,MPI_REQUEST_NULL,MPI_REQUEST_NULL};

150 if (rank) MPI_Irecv(recvbufL,ns,MPI_DOUBLE,rank-1,2,*comm,&req[0]);

151 if (rank != nproc-1) MPI_Irecv(recvbufR,ns,MPI_DOUBLE,rank+1,3,*comm,&req[1]);

152 for (d=0; d<ns; d++) { sendbufL[d] = x[d]; sendbufR[d] = x[(n-1)*ns+d]; }

153 if (rank) MPI_Isend(sendbufL,ns,MPI_DOUBLE,rank-1,3,*comm,&req[2]);

154 if (rank != nproc-1) MPI_Isend(sendbufR,ns,MPI_DOUBLE,rank+1,2,*comm,&req[3]);

160 for (i=1; i<n-1; i++) {

161 for (d=0; d<ns; d++) {

162 norm += ( (a[i*ns+d]*x[(i-1)*ns+d] + b[i*ns+d]*x[i*ns+d] + c[i*ns+d]*x[(i+1)*ns+d] - rhs[i*ns+d])

163 * (a[i*ns+d]*x[(i-1)*ns+d] + b[i*ns+d]*x[i*ns+d] + c[i*ns+d]*x[(i+1)*ns+d] - rhs[i*ns+d]) );

169 MPI_Waitall(4,req,MPI_STATUS_IGNORE);

173 for (d=0; d<ns; d++) {

174 norm += ( (a[d]*recvbufL[d] + b[d]*x[d] + c[d]*x[d+ns*1]- rhs[d])

175 * (a[d]*recvbufL[d] + b[d]*x[d] + c[d]*x[d+ns*1]- rhs[d]) );

177 for (d=0; d<ns; d++) {

178 norm += ( (a[d+ns*(n-1)]*x[d+ns*(n-2)] + b[d+ns*(n-1)]*x[d+ns*(n-1)] + c[d+ns*(n-1)]*recvbufR[d] - rhs[d+ns*(n-1)])

179 * (a[d+ns*(n-1)]*x[d+ns*(n-2)] + b[d+ns*(n-1)]*x[d+ns*(n-1)] + c[d+ns*(n-1)]*recvbufR[d] - rhs[d+ns*(n-1)]) );

182 for (d=0; d<ns; d++) {

183 norm += ( (a[d]*recvbufL[d] + b[d]*x[d] + c[d]*recvbufR[d] - rhs[d])

184 * (a[d]*recvbufL[d] + b[d]*x[d] + c[d]*recvbufR[d] - rhs[d]) );

191 MPI_Allreduce(&norm,&global_norm,1,MPI_DOUBLE,MPI_SUM,*comm);

193 global_norm = sqrt(global_norm/NT);

194 if (!iter) norm0 = global_norm;

203 if (context->

verbose && (!rank))

205 printf(

"\t\titer: %d, norm: %1.16E\n",iter,global_norm);

209 for (d=0; d<ns; d++) {

210 i = 0; x[i*ns+d] = (rhs[i*ns+d] - a[i*ns+d]*recvbufL[d] - c[i*ns+d]*x[d+ns*(i+1)] ) / b[i*ns+d];

211 i = n-1; x[i*ns+d] = (rhs[i*ns+d] - a[i*ns+d]*x[d+ns*(i-1)] - c[i*ns+d]*recvbufR[d]) / b[i*ns+d];

213 for (i=1; i<n-1; i++) {

214 for (d=0; d<ns; d++) {

215 x[i*ns+d] = (rhs[i*ns+d] - a[i*ns+d]*x[d+ns*(i-1)] - c[i*ns+d]*x[d+ns*(i+1)]) / b[i*ns+d];

219 for (d=0; d<ns; d++) x[d] = (rhs[d] - a[d]*recvbufL[d] - c[d]*recvbufR[d]) / b[d];

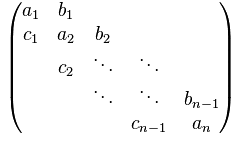

int tridiagIterJacobi(double *a, double *b, double *c, double *x, int n, int ns, void *r, void *m)

Header file for TridiagLU.