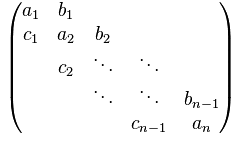

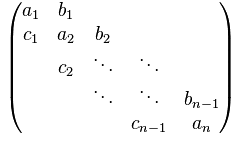

This function tests the implementation of a parallel (distributed memory) solver of block tridiagonal (non-periodic) systems of equations. Three tests are performed and the errors are reported:

1106 int i,j,k,d,ierr=0,nlocal;

1120 double *a2,*b2,*c2,*y;

1123 MPI_Comm_dup(MPI_COMM_WORLD,&world);

1126 ierr =

tridiagLUInit(&context,&world);

if (ierr)

return(ierr);

1137 MPI_Barrier(MPI_COMM_WORLD);

1144 a1 = (

double*) calloc (Ns*nlocal*bs*bs,

sizeof(

double));

1145 b1 = (

double*) calloc (Ns*nlocal*bs*bs,

sizeof(

double));

1146 c1 = (

double*) calloc (Ns*nlocal*bs*bs,

sizeof(

double));

1147 a2 = (

double*) calloc (Ns*nlocal*bs*bs,

sizeof(

double));

1148 b2 = (

double*) calloc (Ns*nlocal*bs*bs,

sizeof(

double));

1149 c2 = (

double*) calloc (Ns*nlocal*bs*bs,

sizeof(

double));

1150 x = (

double*) calloc (Ns*nlocal*bs ,

sizeof(

double));

1151 y = (

double*) calloc (Ns*nlocal*bs ,

sizeof(

double));

1157 MPI_Barrier(MPI_COMM_WORLD);

1159 #ifndef speed_test_only

1170 for (d = 0; d < Ns; d++) {

1171 for (i = 0; i < nlocal; i++) {

1172 for (j=0; j<bs; j++) {

1173 for (k=0; k<bs; k++) {

1174 a1[(i*Ns+d)*bs*bs+j*bs+k] = 0.0;

1175 if (j==k) b1[(i*Ns+d)*bs*bs+j*bs+k] = 1.0;

1176 else b1[(i*Ns+d)*bs*bs+j*bs+k] = 0.0;

1177 c1[(i*Ns+d)*bs*bs+j*bs+k] = 0.0;

1179 x [(i*Ns+d)*bs+j] = rand();

1193 if (!rank) printf(

"Block MPI test 1 ([I]x = b => x = b): \t");

1194 ierr = LUSolver(a1,b1,c1,x,nlocal,Ns,bs,&context,&world);

1195 if (ierr == -1) printf(

"Error - system is singular on process %d\t",rank);

1201 if (!rank) printf(

"error=%E\n",error);

1202 MPI_Barrier(MPI_COMM_WORLD);

1213 for (d = 0; d < Ns; d++) {

1214 for (i = 0; i < nlocal; i++) {

1215 for (j=0; j<bs; j++) {

1216 for (k=0; k<bs; k++) {

1217 a1[(i*Ns+d)*bs*bs+j*bs+k] = 0.0;

1218 if (j==k) b1[(i*Ns+d)*bs*bs+j*bs+k] = 400.0 + ((

double) rand()) / ((

double) RAND_MAX);

1219 else b1[(i*Ns+d)*bs*bs+j*bs+k] = 100.0 + ((

double) rand()) / ((

double) RAND_MAX);

1220 if (rank == nproc-1) c1[(i*Ns+d)*bs*bs+j*bs+k] = (i == nlocal-1 ? 0 : ((

double) rand()) / ((

double) RAND_MAX));

1221 else c1[(i*Ns+d)*bs*bs+j*bs+k] = ((

double) rand()) / ((

double) RAND_MAX);

1223 x[(i*Ns+d)*bs+j] = ((

double) rand()) / ((

double) RAND_MAX);

1237 if (!rank) printf("Block MPI test 2 ([U]x = b => x = [U]^(-1)b):\t");

1238 ierr = LUSolver(a1,b1,c1,x,nlocal,Ns,bs,&context,&world);

1239 if (ierr == -1) printf("Error - system is singular on process %d\t",rank);

1245 if (!rank) printf("error=%E\n",error);

1246 MPI_Barrier(MPI_COMM_WORLD);

1258 for (d = 0; d < Ns; d++) {

1259 for (i = 0; i < nlocal; i++) {

1260 for (j=0; j<bs; j++) {

1261 for (k=0; k<bs; k++) {

1262 if (!rank ) a1[(i*Ns+d)*bs*bs+j*bs+k] = (i == 0 ? 0.0 : ((

double) rand()) / ((

double) RAND_MAX));

1263 else a1[(i*Ns+d)*bs*bs+j*bs+k] = ((

double) rand()) / ((

double) RAND_MAX);

1264 if (j==k) b1[(i*Ns+d)*bs*bs+j*bs+k] = 200.0*(1.0 + ((

double) rand()) / ((

double) RAND_MAX));

1265 else b1[(i*Ns+d)*bs*bs+j*bs+k] = 100.0*(1.0 + ((

double) rand()) / ((

double) RAND_MAX));

1266 if (rank == nproc-1) c1[(i*Ns+d)*bs*bs+j*bs+k] = (i == nlocal-1 ? 0 : ((

double) rand()) / ((

double) RAND_MAX));

1267 else c1[(i*Ns+d)*bs*bs+j*bs+k] = ((

double) rand()) / ((

double) RAND_MAX);

1269 x[(i*Ns+d)*bs+j] = ((

double) rand()) / ((

double) RAND_MAX);

1283 if (!rank) printf("Block MPI test 3 ([A]x = b => x = [A]^(-1)b):\t");

1284 ierr = LUSolver(a1,b1,c1,x,nlocal,Ns,bs,&context,&world);

1285 if (ierr == -1) printf("Error - system is singular on process %d\t",rank);

1291 if (!rank) printf("error=%E\n",error);

1292 MPI_Barrier(MPI_COMM_WORLD);

1294 if (!rank) printf(

"Skipping accuracy tests. Remove -Dspeed_test_only compilation flag to enable.\n");

1313 for (d = 0; d < Ns; d++) {

1314 for (i = 0; i < nlocal; i++) {

1315 for (j=0; j<bs; j++) {

1316 for (k=0; k<bs; k++) {

1317 if (!rank ) a1[(i*Ns+d)*bs*bs+j*bs+k] = (i == 0 ? 0.0 : ((

double) rand()) / ((double) RAND_MAX));

1318 else a1[(i*Ns+d)*bs*bs+j*bs+k] = ((

double) rand()) / ((

double) RAND_MAX);

1319 if (j==k) b1[(i*Ns+d)*bs*bs+j*bs+k] = 200.0*(1.0 + ((

double) rand()) / ((

double) RAND_MAX));

1320 else b1[(i*Ns+d)*bs*bs+j*bs+k] = 100.0*(1.0 + ((

double) rand()) / ((

double) RAND_MAX));

1321 if (rank == nproc-1) c1[(i*Ns+d)*bs*bs+j*bs+k] = (i == nlocal-1 ? 0 : ((

double) rand()) / ((

double) RAND_MAX));

1322 else c1[(i*Ns+d)*bs*bs+j*bs+k] = ((

double) rand()) / ((

double) RAND_MAX);

1324 x[(i*Ns+d)*bs+j] = ((

double) rand()) / ((

double) RAND_MAX);

1338 printf("\nBlock MPI test 4 (Speed test - %d Tridiagonal Solves):\n",NRuns);

1339 double runtimes[5] = {0.0,0.0,0.0,0.0,0.0};

1344 for (i = 0; i < NRuns; i++) {

1351 MPI_Barrier(MPI_COMM_WORLD);

1352 ierr = LUSolver(a1,b1,c1,x,nlocal,Ns,bs,&context,&world);

1353 MPI_Barrier(MPI_COMM_WORLD);

1357 runtimes[0] += context.total_time;

1358 runtimes[1] += context.stage1_time;

1359 runtimes[2] += context.stage2_time;

1360 runtimes[3] += context.stage3_time;

1361 runtimes[4] += context.stage4_time;

1362 if (ierr == -1) printf(

"Error - system is singular on process %d\t",rank);

1370 MPI_Allreduce(MPI_IN_PLACE,&runtimes[0],5,MPI_DOUBLE,MPI_MAX,MPI_COMM_WORLD);

1373 if (ierr == -1) printf(

"Error - system is singular on process %d\t",rank);

1375 printf(

"\t\tTotal walltime = %E\n",runtimes[0]);

1376 printf(

"\t\tStage1 walltime = %E\n",runtimes[1]);

1377 printf(

"\t\tStage2 walltime = %E\n",runtimes[2]);

1378 printf(

"\t\tStage3 walltime = %E\n",runtimes[3]);

1379 printf(

"\t\tStage4 walltime = %E\n",runtimes[4]);

1380 printf(

"\t\tAverage error = %E\n",error);

1382 out = fopen(filename,

"w");

1383 fprintf(out,

"%5d %1.16E %1.16E %1.16E %1.16E %1.16E %1.16E\n",nproc,runtimes[0],

1384 runtimes[1],runtimes[2],runtimes[3],runtimes[4],error);

1387 MPI_Barrier(MPI_COMM_WORLD);

1404 MPI_Comm_free(&world);

static void CopyArraySimple(double *, double *, int)

static double CalculateErrorBlock(double *, double *, double *, double *, double *, int, int, int, int, int)

int tridiagLUInit(void *, void *)

static int partition1D(int, int, int, int *)