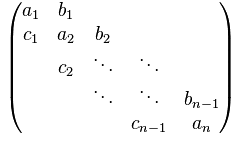

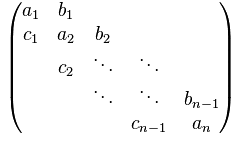

Solve block tridiagonal (non-periodic) systems of equations using parallel LU decomposition: This function can solve multiple independent systems with one call. The systems need not share the same left- or right-hand-sides. The iterative substructuring method is used in this function that can be briefly described through the following 4 stages:

- Stage 1: Parallel elimination of the tridiagonal blocks on each processor comprising all points of the subdomain except the 1st point (unless its the 1st global point, i.e., a physical boundary)

- Stage 2: Elimination of the 1st row on each processor (except the 1st processor) using the last row of the previous processor.

- Stage 3: Solution of the reduced tridiagonal system that represents the coupling of the system across the processors, using blocktridiagIterJacobi() in this implementation.

- Stage 4: Backward-solve to obtain the final solution

Specific details of the method implemented here are available in:

- Ghosh, D., Constantinescu, E. M., Brown, J., "Scalable Nonlinear Compact Schemes", Technical Memorandum, ANL/MCS-TM-340, Argonne National Laboratory, April 2014, (http://www.mcs.anl.gov/publication/scalable-nonlinear-compact-schemes) (also available at http://debog.github.io/Files/2014_Ghosh_Consta_Brown_MCSTR340.pdf).

- Ghosh, D., Constantinescu, E. M., Brown, J., Efficient Implementation of Nonlinear Compact Schemes on Massively Parallel Platforms, SIAM Journal on Scientific Computing, 37 (3), 2015, C354–C383 (http://dx.doi.org/10.1137/140989261).

More references on this class of parallel tridiagonal solvers:

- E. Polizzi and A. H. Sameh, "A parallel hybrid banded system solver: The SPIKE algorithm", Parallel Comput., 32 (2006), pp. 177–194.

- E. Polizzi and A. H. Sameh, "SPIKE: A parallel environment for solving banded linear systems", Comput. & Fluids, 36 (2007), pp. 113–120.

Array layout: The arguments a, b, and c are local 1D arrays (containing this processor's part of the subdiagonal, diagonal, and superdiagonal) of size (n X ns X bs^2), and x is a local 1D array (containing this processor's part of the right-hand-side, and will contain the solution on exit) of size (n X ns X bs), where n is the local size of the system, ns is the number of independent systems to solve, and bs is the block size. The ordering of the elements in these arrays is as follows:

- Each block is stored in the row-major format.

- Blocks of the same row for each of the independent systems are stored adjacent to each other.

For example, consider the following systems:

\begin{equation} \left[\begin{array}{ccccc} B_0^k & C_0^k & & & \\ A_1^k & B_1^k & C_1^k & & \\ & A_2^k & B_2^k & C_2^k & & \\ & & A_3^k & B_3^k & C_3^k & \\ & & & A_4^k & B_4^k & C_4^k \\ \end{array}\right] \left[\begin{array}{c} X_0^k \\ X_1^k \\ X_2^k \\ X_3^k \\ X_4^k \end{array}\right] = \left[\begin{array}{c} R_0^k \\ R_1^k \\ R_2^k \\ R_3^k \\ R_4^k \end{array}\right]; \ \ k= 1,\cdots,ns \end{equation}

where \(A\), \(B\), and \(C\) are matrices of size bs = 2 (say), and let \( ns = 3\). In the equation above, we have

\begin{equation} B_i^k = \left[\begin{array}{cc} b_{00,i}^k & b_{01,i}^k \\ b_{10,i}^k & b_{11,i}^k \end{array}\right], X_i^k = \left[\begin{array}{c} x_{0,i}^k \\ x_{1,i}^k \end{array} \right], R_i^k = \left[\begin{array}{c} r_{0,i}^k \\ r_{1,i}^k \end{array} \right] \end{equation}

Note that in the code, \(X\) and \(R\) are the same array x.

Then, the array b must be a 1D array with the following layout of elements:

[

b_{00,0}^0, b_{01,0}^0, b_{10,0}^0, b_{11,0}^0, b_{00,0}^1, b_{01,0}^1, b_{10,0}^1, b_{11,0}^1, b_{00,0}^2, b_{01,0}^2, b_{10,0}^2, b_{11,0}^2,

b_{00,1}^0, b_{01,1}^0, b_{10,1}^0, b_{11,1}^0, b_{00,1}^1, b_{01,1}^1, b_{10,1}^1, b_{11,1}^1, b_{00,1}^2, b_{01,1}^2, b_{10,1}^2, b_{11,1}^2,

...,

b_{00,n-1}^0, b_{01,n-1}^0, b_{10,n-1}^0, b_{11,n-1}^0, b_{00,n-1}^1, b_{01,n-1}^1, b_{10,n-1}^1, b_{11,n-1}^1, b_{00,n-1}^2, b_{01,n-1}^2, b_{10,n-1}^2, b_{11,n-1}^2

]

The arrays a and c are stored similarly.

The array corresponding to a vector (the solution and the right-hand-side x) must be a 1D array with the following layout of elements:

[

x_{0,0}^0, x_{1,0}^0, x_{0,0}^1, x_{1,0}^1,x_{0,0}^2, x_{1,0}^2,

x_{0,1}^0, x_{1,1}^0, x_{0,1}^1, x_{1,1}^1,x_{0,1}^2, x_{1,1}^2,

...,

x_{0,n-1}^0, x_{1,n-1}^0, x_{0,n-1}^1, x_{1,n-1}^1,x_{0,n-1}^2, x_{1,n-1}^2

]

Notes:

- This function does not preserve the sub-diagonal, diagonal, super-diagonal elements and the right-hand-sides.

- The input array x contains the right-hand-side on entering the function, and the solution on exiting it.

- Parameters

-

| a | Array containing the sub-diagonal elements |

| b | Array containing the diagonal elements |

| c | Array containing the super-diagonal elements |

| x | Right-hand side; will contain the solution on exit |

| n | Local size of the system on this processor (not multiplied by the block size) |

| ns | Number of systems to solve |

| bs | Block size |

| r | Object of type TridiagLU (contains wall times at exit) |

| m | MPI communicator |

Definition at line 107 of file blocktridiagLU.c.

121 int d,i,j,istart,iend,size;

122 int rank,nproc,bs2=bs*bs,nsbs=ns*bs;

123 struct timeval start,stage1,stage2,stage3,stage4;

129 MPI_Comm *comm = (MPI_Comm*) m;

134 MPI_Comm_size(*comm,&nproc);

135 MPI_Comm_rank(*comm,&rank);

143 fprintf(stderr,

"Error in tridiagLU(): NULL pointer passed for parameters.\n");

148 gettimeofday(&start,NULL);

150 if ((ns == 0) || (n == 0) || (bs == 0))

return(0);

152 xs1 = (

double*) calloc (nsbs,

sizeof(

double));

153 xp1 = (

double*) calloc (nsbs,

sizeof(

double));

154 for (i=0; i<nsbs; i++) xs1[i] = xp1[i] = 0;

157 istart = (rank == 0 ? 1 : 2);

159 for (i = istart; i < iend; i++) {

160 double binv[bs2], factor[bs2];

161 for (d = 0; d < ns; d++) {

179 gettimeofday(&stage1,NULL);

184 double *sendbuf, *recvbuf;

185 size = ns*bs2*(nvar-1)+nsbs;

186 sendbuf = (

double*) calloc (size,

sizeof(

double));

187 recvbuf = (

double*) calloc (size,

sizeof(

double));

188 for (d=0; d<ns; d++) {

189 for (i=0; i<bs2; i++) {

190 sendbuf[(0*ns+d)*bs2+i] = a[((n-1)*ns+d)*bs2+i];

191 sendbuf[(1*ns+d)*bs2+i] = b[((n-1)*ns+d)*bs2+i];

192 sendbuf[(2*ns+d)*bs2+i] = c[((n-1)*ns+d)*bs2+i];

194 for (i=0; i<bs; i++) sendbuf[3*ns*bs2+d*bs+i] = x[((n-1)*ns+d)*bs+i];

197 MPI_Request req[2] = {MPI_REQUEST_NULL,MPI_REQUEST_NULL};

198 if (rank) MPI_Irecv(recvbuf,size,MPI_DOUBLE,rank-1,1436,*comm,&req[0]);

199 if (rank != nproc-1) MPI_Isend(sendbuf,size,MPI_DOUBLE,rank+1,1436,*comm,&req[1]);

200 MPI_Waitall(2,&req[0],MPI_STATUS_IGNORE);

204 for (d = 0; d < ns; d++) {

205 double am1[bs2], bm1[bs2], cm1[bs2], xm1[bs];

206 for (i=0; i<bs2; i++) {

207 am1[i] = recvbuf[(0*ns+d)*bs2+i];

208 bm1[i] = recvbuf[(1*ns+d)*bs2+i];

209 cm1[i] = recvbuf[(2*ns+d)*bs2+i];

211 for (i=0; i<bs; i++) xm1[i] = recvbuf[3*ns*bs2+d*bs+i];

212 double factor[bs2], binv[bs2];

220 _MatrixInvert_ (b+((n-1)*ns+d)*bs2,binv,bs);

if (ierr)

return(ierr);

233 gettimeofday(&stage2,NULL);

239 zero = (

double*) calloc (ns*bs2,

sizeof(

double));

240 eye = (

double*) calloc (ns*bs2,

sizeof(

double));

241 for (d=0; d<ns*bs2; d++) zero[d] = eye[d] = 0.0;

242 for (d=0; d<ns; d++) {

243 for (i=0; i<bs; i++) eye[d*bs2+(i*bs+i)] = 1.0;

248 fprintf(stderr,

"Error in blocktridiagLU(): Gather-and-solve for reduced system not available.\n");

259 MPI_Request req[2] = {MPI_REQUEST_NULL,MPI_REQUEST_NULL};

260 for (d=0; d<nsbs; d++) xs1[d] = x[d];

261 if (rank+1 < nproc) MPI_Irecv(xp1,nsbs,MPI_DOUBLE,rank+1,1323,*comm,&req[0]);

262 if (rank) MPI_Isend(xs1,nsbs,MPI_DOUBLE,rank-1,1323,*comm,&req[1]);

263 MPI_Waitall(2,&req[0],MPI_STATUS_IGNORE);

267 fprintf(stderr,

"Error: nproc > 1 for a serial run!\n");

272 gettimeofday(&stage3,NULL);

276 iend = (rank == 0 ? 0 : 1);

278 for (d = 0; d < ns; d++) {

279 double binv[bs2],xt[bs];

284 for (j=0; j<bs; j++) x[(istart*ns+d)*bs+j]=xt[j];

286 for (i = istart-1; i > iend-1; i--) {

287 for (d = 0; d < ns; d++) {

288 double binv[bs2],xt[bs];

293 for (j=0; j<bs; j++) x[(i*ns+d)*bs+j] = xt[j];

298 gettimeofday(&stage4,NULL);

306 walltime = ((stage1.tv_sec * 1000000 + stage1.tv_usec) - (start.tv_sec * 1000000 + start.tv_usec));

307 params->

stage1_time = (double) walltime / 1000000.0;

308 walltime = ((stage2.tv_sec * 1000000 + stage2.tv_usec) - (stage1.tv_sec * 1000000 + stage1.tv_usec));

309 params->

stage2_time = (double) walltime / 1000000.0;

310 walltime = ((stage3.tv_sec * 1000000 + stage3.tv_usec) - (stage2.tv_sec * 1000000 + stage2.tv_usec));

311 params->

stage3_time = (double) walltime / 1000000.0;

312 walltime = ((stage4.tv_sec * 1000000 + stage4.tv_usec) - (stage3.tv_sec * 1000000 + stage3.tv_usec));

313 params->

stage4_time = (double) walltime / 1000000.0;

314 walltime = ((stage4.tv_sec * 1000000 + stage4.tv_usec) - (start.tv_sec * 1000000 + start.tv_usec));

315 params->

total_time = (double) walltime / 1000000.0;

#define _MatrixMultiplySubtract_(C, A, B, N)

#define _MatrixMultiply_(A, B, C, N)

#define _MatrixZero_(A, N)

char reducedsolvetype[50]

int blocktridiagIterJacobi(double *, double *, double *, double *, int, int, int, void *, void *)

#define _MatVecMultiplySubtract_(y, A, x, N)

#define _MatVecMultiply_(A, x, y, N)

#define _MatrixInvert_(A, B, N)