
TridiagLU

1.0

Scalable, parallel solver for tridiagonal system of equations


Solve a block tridiagonal system with the Jacobi method.

#include <stdlib.h>

#include <stdio.h>

#include <math.h>

#include <mpi.h>

#include <tridiagLU.h>

#include <matops.h>

Functions

| int | blocktridiagIterJacobi (double *a, double *b, double *c, double *x, int n, int ns, int bs, void *r, void *m) |

Solve a block tridiagonal system with the Jacobi method.

Definition in file blocktridiagIterJacobi.c.

int blocktridiagIterJacobi (double *a, double *b, double *c, double *x, int n, int ns, int bs, void *r, void *m)

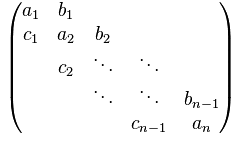

Solves block tridiagonal (non-periodic) systems of equations using point Jacobi iterations. This function can solve multiple independent systems with one call; the systems need not share the same left- or right-hand sides. The initial guess is taken as the solution of

\begin{equation} {\rm diag}\left[{\bf b}\right]{\bf x} = {\bf r} \end{equation}

where \({\bf b}\) represents the diagonal elements of the tridiagonal system, and \({\bf r}\) is the right-hand-side, stored in \({\bf x}\) at the start of this function.
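The initial guess and the Jacobi update can be illustrated with a minimal serial sketch for the scalar case (bs = 1, ns = 1). This is not the library's implementation: the function name jacobi_tridiag_scalar, the maxiter and tol arguments, and the stopping test on the size of the update are all assumptions made for illustration, and the MPI exchange of boundary values between processors is omitted.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical helper (not part of TridiagLU): serial point Jacobi for one
 * scalar tridiagonal system. On entry x holds the right-hand side; on exit
 * it holds the approximate solution. a[0] and c[n-1] are never read.
 * Returns the number of iterations performed, or -1 if allocation fails. */
int jacobi_tridiag_scalar(const double *a, const double *b, const double *c,
                          double *x, int n, int maxiter, double tol)
{
  assert(n > 0 && maxiter >= 0);
  double *rhs  = malloc((size_t)n * sizeof *rhs);
  double *xnew = malloc((size_t)n * sizeof *xnew);
  if (!rhs || !xnew) { free(rhs); free(xnew); return -1; }

  /* Save the right-hand side, then form the initial guess diag[b] x = r. */
  memcpy(rhs, x, (size_t)n * sizeof *rhs);
  for (int i = 0; i < n; i++) x[i] = rhs[i] / b[i];

  int iter;
  for (iter = 0; iter < maxiter; iter++) {
    double norm2 = 0.0; /* squared 2-norm of the update */
    for (int i = 0; i < n; i++) {
      double s = rhs[i];
      if (i > 0)     s -= a[i] * x[i - 1]; /* sub-diagonal coupling   */
      if (i < n - 1) s -= c[i] * x[i + 1]; /* super-diagonal coupling */
      xnew[i] = s / b[i];
      double d = xnew[i] - x[i];
      norm2 += d * d;
    }
    memcpy(x, xnew, (size_t)n * sizeof *x);
    if (norm2 < tol * tol) { iter++; break; } /* count this iteration */
  }

  free(rhs);
  free(xnew);
  return iter;
}
```

Point Jacobi converges for diagonally dominant systems; for example, with a = c = -1 and b = 4 the update shrinks by roughly a factor of two or more per iteration.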

Array layout: The arguments a, b, and c are local 1D arrays (containing this processor's part of the subdiagonal, diagonal, and superdiagonal) of size (n × ns × bs^2), and x is a local 1D array of size (n × ns × bs) that contains this processor's part of the right-hand side on entry and the solution on exit. Here n is the local size of the system, ns is the number of independent systems to solve, and bs is the block size. As the example below shows, each block is stored in row-major order, and the blocks at the same block-row index of all ns systems are stored next to each other.

For example, consider the following systems:

\begin{equation} \left[\begin{array}{ccccc} B_0^k & C_0^k & & & \\ A_1^k & B_1^k & C_1^k & & \\ & A_2^k & B_2^k & C_2^k & \\ & & A_3^k & B_3^k & C_3^k \\ & & & A_4^k & B_4^k \\ \end{array}\right] \left[\begin{array}{c} X_0^k \\ X_1^k \\ X_2^k \\ X_3^k \\ X_4^k \end{array}\right] = \left[\begin{array}{c} R_0^k \\ R_1^k \\ R_2^k \\ R_3^k \\ R_4^k \end{array}\right]; \ \ k = 0,\cdots,ns-1 \end{equation}

where \(A\), \(B\), and \(C\) are square blocks of size bs × bs; take bs = 2 and \( ns = 3\) for this example. In the equation above, we have

\begin{equation} B_i^k = \left[\begin{array}{cc} b_{00,i}^k & b_{01,i}^k \\ b_{10,i}^k & b_{11,i}^k \end{array}\right], X_i^k = \left[\begin{array}{c} x_{0,i}^k \\ x_{1,i}^k \end{array} \right], R_i^k = \left[\begin{array}{c} r_{0,i}^k \\ r_{1,i}^k \end{array} \right] \end{equation}

Note that in the code, \(X\) and \(R\) are the same array x.

Then, the array b must be a 1D array with the following layout of elements:

[

b_{00,0}^0, b_{01,0}^0, b_{10,0}^0, b_{11,0}^0, b_{00,0}^1, b_{01,0}^1, b_{10,0}^1, b_{11,0}^1, b_{00,0}^2, b_{01,0}^2, b_{10,0}^2, b_{11,0}^2,

b_{00,1}^0, b_{01,1}^0, b_{10,1}^0, b_{11,1}^0, b_{00,1}^1, b_{01,1}^1, b_{10,1}^1, b_{11,1}^1, b_{00,1}^2, b_{01,1}^2, b_{10,1}^2, b_{11,1}^2,

...,

b_{00,n-1}^0, b_{01,n-1}^0, b_{10,n-1}^0, b_{11,n-1}^0, b_{00,n-1}^1, b_{01,n-1}^1, b_{10,n-1}^1, b_{11,n-1}^1, b_{00,n-1}^2, b_{01,n-1}^2, b_{10,n-1}^2, b_{11,n-1}^2

]

The arrays a and c are stored similarly.
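The layout above can be expressed as an index formula. The sketch below is only an illustration of that formula: block_entry_index is a hypothetical helper, not a function provided by TridiagLU.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical helper (not part of TridiagLU): flat index of entry
 * (row, col) of the block at block-row i of system k, for arrays a, b, c.
 * Blocks are row-major; systems are interleaved within each block-row. */
size_t block_entry_index(int i, int k, int row, int col, int ns, int bs)
{
  assert(i >= 0 && k >= 0 && k < ns);
  assert(row >= 0 && row < bs && col >= 0 && col < bs);
  return ((size_t)i * ns + k) * bs * bs + (size_t)row * bs + col;
}
```

With ns = 3 and bs = 2, b_{10,1}^2 is found at b[block_entry_index(1, 2, 1, 0, 3, 2)], which is offset 22 in the listing above.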

The array corresponding to a vector (the solution and the right-hand-side x) must be a 1D array with the following layout of elements:

[

x_{0,0}^0, x_{1,0}^0, x_{0,0}^1, x_{1,0}^1, x_{0,0}^2, x_{1,0}^2,

x_{0,1}^0, x_{1,1}^0, x_{0,1}^1, x_{1,1}^1, x_{0,1}^2, x_{1,1}^2,

...,

x_{0,n-1}^0, x_{1,n-1}^0, x_{0,n-1}^1, x_{1,n-1}^1, x_{0,n-1}^2, x_{1,n-1}^2

]
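A caller whose right-hand sides are stored one system at a time has to interleave them into this layout before the call. The following sketch shows one way to do that. Both vector_entry_index and pack_system_rhs are hypothetical helpers, and the per-system input layout rk[i*bs + j] is an assumption, not something the library prescribes.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical helper (not part of TridiagLU): flat index of component j
 * of the vector block at block-row i of system k, for the array x. */
size_t vector_entry_index(int i, int k, int j, int ns, int bs)
{
  assert(i >= 0 && k >= 0 && k < ns && j >= 0 && j < bs);
  return ((size_t)i * ns + k) * bs + j;
}

/* Hypothetical helper: copy the right-hand side of system k, assumed to be
 * stored contiguously as rk[i*bs + j], into the interleaved array x. */
void pack_system_rhs(const double *rk, double *x, int k, int n, int ns, int bs)
{
  for (int i = 0; i < n; i++) {
    for (int j = 0; j < bs; j++) {
      x[vector_entry_index(i, k, j, ns, bs)] = rk[(size_t)i * bs + j];
    }
  }
}
```

Calling pack_system_rhs once per system, for k = 0 to ns - 1, fills all n × ns × bs entries of x.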

Parameters:

| Parameter | Description |
| --- | --- |
| a | Array containing the sub-diagonal elements |
| b | Array containing the diagonal elements |
| c | Array containing the super-diagonal elements |
| x | Right-hand side; will contain the solution on exit |
| n | Local size of the system on this processor (not multiplied by the block size) |
| ns | Number of systems to solve |
| bs | Block size |
| r | Object of type TridiagLU |
| m | MPI communicator |

Definition at line 88 of file blocktridiagIterJacobi.c.